The Craziness and Challenges in Today’s AI Market

The novelty effect of AI that started with ChatGPT’s launch in November 2022 is winding down. Yet massive AI investments are still ramping up… Alphabet (Google), Amazon, Meta, and Microsoft are expected to spend $210 billion — roughly 20% of Spain’s GDP and 66% of the SaaS market — on AI through the rest of 2024.

This apparent misalignment is making many, including the likes of Sequoia Capital and Goldman Sachs (and, in the last few days, the markets), question whether there’s an AI bubble. Will the investment in AI be justified? Or will we soon see a dramatic slowdown in capacity buildup and general AI interest?

I believe the outcome of the bubble question depends on whether AI’s value increases before a significant write-off event occurs. Additionally, the non-linear, exponential nature of AI’s growth across multiple dimensions, in both use cases and complexity, makes forecasting that growth very challenging.

A major write-off event could be triggered by several factors, such as market corrections affecting top tech companies, defaults on the billions of dollars companies are borrowing to create GPUs as a service, or even the accelerating depreciation curves of Nvidia’s new generation deployment cycles.

My take is not a prediction. In fact, I don’t like or trust expert predictions on almost any topic, as they’re generally wrong. Instead, I publish because this kind of writing helps and forces me to dive deeper and be more rigorous in research. This is simply my attempt at making sense of the AI news and distilling it down to what I believe are its first principles.

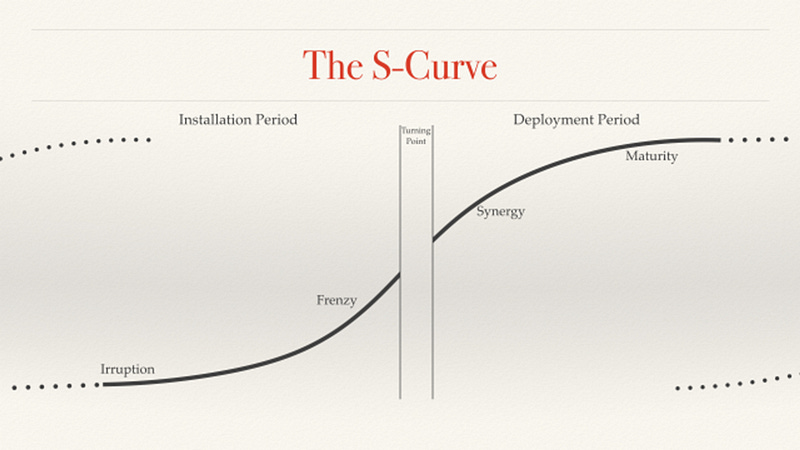

The AI S-Curve

We could spend hours debating the potential of AI, but academic literature tells us that the adoption and value created by every new technological wave come in an S-shaped curve. Specifically, I find Carlota Perez’s interpretation of the curve to be a very good framework (that is not a theory) to decompose what’s happening.

Irruption: GenAI Comes of Age

In Carlota’s framework, every new technological wave (or techno-economic paradigm as she calls them) starts when several important technologies converge, creating a general-purpose technology with the potential to transform the economy. Think about the Industrial Revolution (1771), the Age of Steam and Railways (1829), the Age of Oil and Mass Production (1908), and the Information Technology Revolution (1971).

GenAI’s rise stems from decades of technological advancements and AI research since the 1970s. The personal computer, the internet, mobile computing, and cloud computing, along with research in neural networks, deep learning, and the transformer architecture, set the foundations.

Simply put, these technologies have provided the two essential elements — compute and data — LLMs needed to captivate our imagination.

Frenzy: AI’s Novelty and Hype

The second phase in the framework is Frenzy. The frenzy begins when the technology matures and leaves the labs to enter the hands of the industry.

November 30, 2022. That was the day OpenAI launched ChatGPT, and the day GenAI’s frenzy phase began. Overnight, people went from thinking Alexa (which can barely play the right song) was the closest thing to AI to having full-fledged conversations with ChatGPT, Luzia, and the other assistants that emerged. 🤯🤯🤯

At this point, argues Carlota, financial capital is poured into “the new thing” and a phase of intense innovation (craziness) and infrastructure development begins.

An AI race.

“When we go through a curve like this, the risk of underinvesting is dramatically greater than the risk of overinvesting for us here, even in scenarios where if it turns out that we are overinvesting.”

Sundar Pichai’s view of CapEx investment, quoted from Alphabet’s Q2 2024 earnings call, reflects the industry’s general sentiment.

Alphabet, Amazon, Meta, and Microsoft are projected to spend around $200 to $210 billion combined on AI-related CapEx in 2024 — a staggering 38% increase year-over-year. This massive investment will go towards GPU acquisition, data centers, and energy infrastructure to support AI operations.

This spending spree will likely continue as long as the scaling laws hold true (more compute + more data = better models). Essentially, the idea is to throw money at the problem and produce better models. As long as these scaling laws don’t hit a ceiling, this sets the stage for an arms race toward artificial general intelligence.

Leopold Aschenbrenner, a former member of OpenAI’s Superalignment team, suggests that if this trend continues, we could see trillion-dollar clusters by 2030. Is Leopold’s forecast hard to believe? Not really. It might be hard to grasp, but it’s definitely possible, and it is already happening.

Microsoft and OpenAI are working on a $100 billion cluster. Amazon bought a nearly 1-gigawatt data center campus connected to a nuclear plant. Elon Musk is firing up the largest cluster in the world with 100,000 H100 GPUs in a single location (7X Microsoft’s current location).

Mark Zuckerberg has acquired 350,000 H100s (at $25,000 to $30,000 each, you can do the math). And Spain is launching the second-largest data center with the capacity to read 12,000 Quixotes per minute (the equivalent of seven H100s — which you could rent for <$300 a day).
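For anyone who would rather not do the math by hand, here is a minimal sketch using only the two figures quoted above (350,000 GPUs at $25,000 to $30,000 each). The function name `fleet_cost` is made up for illustration, and the result covers hardware only; networking, power, and buildings are ignored.

```python
# Rough hardware-only cost of a 350,000-GPU H100 fleet,
# using the unit price range quoted in the text.
NUM_GPUS = 350_000
PRICE_LOW_USD = 25_000
PRICE_HIGH_USD = 30_000


def fleet_cost(num_gpus: int, unit_price_usd: float) -> float:
    """Hypothetical helper: GPU count times unit price, nothing else."""
    return num_gpus * unit_price_usd


low = fleet_cost(NUM_GPUS, PRICE_LOW_USD)
high = fleet_cost(NUM_GPUS, PRICE_HIGH_USD)
print(f"${low / 1e9:.2f}B to ${high / 1e9:.2f}B")
```

That is roughly $9 billion to $10.5 billion on GPUs alone.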

Jokes aside, this is clearly happening. But just like in every movie, there’s always a dramatic moment… This is what Carlota calls the Turning Point.

The Turning Point

The Turning Point occurs with the bursting and retreat of financial capital, potentially leading to a recession. Financial capital, being more speculative, steps aside to make space for production capital, which is more practical and economically impactful. This shift moves innovation from radical to predictable, leading to the widespread adoption of technology and a focus on existing use cases.

Financial Capital vs. Production Capital

Currently, financial analysts and Wall Street are starting to question the ROI of AI CapEx, calling for a CapEx reset. A CapEx reset would involve a write-off of the infrastructure investment, making ROI easier to achieve (a smaller denominator in the ROI equation), albeit at the expense of significant losses (ahem). Meanwhile, industry leaders believe in the technology’s long-term value and see no need for a reset.
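The "smaller denominator" effect can be sketched in a few lines. Only the $210 billion CapEx figure comes from this article; the annual return and the share written off are hypothetical numbers picked purely for illustration, and ROI is simplified here to annual return divided by invested capital.

```python
def roi(annual_return_usd: float, invested_capital_usd: float) -> float:
    """Simplified ROI: annual return divided by the capital still on the books."""
    return annual_return_usd / invested_capital_usd


CAPEX_USD = 210e9          # 2024 projection quoted earlier in the article
ANNUAL_RETURN_USD = 20e9   # hypothetical, for illustration only
WRITE_OFF_SHARE = 0.5      # hypothetical share of CapEx written off

roi_before = roi(ANNUAL_RETURN_USD, CAPEX_USD)

remaining_capital = CAPEX_USD * (1 - WRITE_OFF_SHARE)
roi_after = roi(ANNUAL_RETURN_USD, remaining_capital)
realized_loss = CAPEX_USD - remaining_capital

print(f"ROI before reset: {roi_before:.1%}")
print(f"ROI after reset:  {roi_after:.1%}")
print(f"Loss recognized:  ${realized_loss / 1e9:.0f}B")
```

Under these assumptions the same revenue looks twice as good after the reset, but only because half of the investment has been recognized as a loss.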

The bubble bursts if we don’t generate meaningful revenue on time

History has a tendency to repeat itself, and while its predictive power is not always strong, it often outperforms expert predictions (this paper looks at the predictive power of history). To understand what forces are at play and whether a frenzy will end in a burst, we first need to understand what we mean by a bubble.

What do we mean by a bubble?

In the context of the AI bubble, we can define it as a scenario where there is no convincing reason to believe that the industry will generate enough economic value per token in a reasonable timeframe to justify the massive CapEx investments. Once again, time and money.

The Timing Perspective

Overnight success can take a while.

Timing dictates how long we have to figure out how to generate revenue from AI.

Let’s be honest: revenue from AI today is mostly experimental or nonexistent. Perez’s framework suggests it takes time to figure out product-market fit for a new wave of technology, and CapEx investment is necessary to create space for experimentation and disruption. H100s are typically depreciated over a five-year period, which, in accounting and business terms, sets our timeframe. But that is the idealized schedule on which most economic calculations are based; in practice, three things can accelerate this depreciation.

Hardware evolves faster than anticipated (old hardware becomes obsolete), software improves (existing hardware is used more efficiently), or there is a catastrophic blow-up that completely dries up the market.
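To make "accelerated depreciation" concrete, here is a small sketch comparing book value under the five-year schedule with a shorter one. It assumes straight-line depreciation with no salvage value (an assumption, the article does not specify a method), uses the $30,000 upper-end H100 price quoted earlier, and the three-year life is a hypothetical stand-in for "faster than planned".

```python
def book_value(purchase_price: float, useful_life_years: float, age_years: float) -> float:
    """Straight-line book value, floored at zero (no salvage value assumed)."""
    remaining_fraction = max(0.0, 1.0 - age_years / useful_life_years)
    return purchase_price * remaining_fraction


PRICE_USD = 30_000   # upper end of the H100 price range quoted earlier
AGE_YEARS = 2

planned = book_value(PRICE_USD, 5, AGE_YEARS)       # the five-year schedule
accelerated = book_value(PRICE_USD, 3, AGE_YEARS)   # hypothetical three-year life

print(f"5-year life: ${planned:,.0f} left after {AGE_YEARS} years")
print(f"3-year life: ${accelerated:,.0f} left after {AGE_YEARS} years")
```

Two years in, the same GPU carries about $18,000 of value on the five-year schedule but only about $10,000 if its useful life turns out to be three years. The difference is what would have to be written off.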

Hardware Technology Changes

Technology evolves fast, especially in the realm of GPUs. Nvidia is pushing the envelope with its next-generation Blackwell architecture GPUs, moving to yearly refreshes and making a ton of money along the way.

But as Jeff Bezos famously said, “Your margin is my opportunity.” A new-generation chip from a startup or big tech company could completely shake up the landscape and accelerate the depreciation of the hundreds of billions invested in Nvidia chips.

This scenario might seem unlikely. A lot needs to happen for it to become a reality, given the network effects in the chip world. But let’s not kid ourselves: Nvidia isn’t invincible. (No one is. Remember BlackBerry?) Nvidia’s ecosystem is a double-edged sword. It’s their strength now, but it could become a weakness if open standards gain traction. And don’t forget the big tech giants are cooking up their own AI chips. If Alphabet, Amazon, Microsoft, or a startup such as Groq cracks the code, Nvidia could be in for a rough ride.

Software Technology Changes

The “Attention is All You Need” research paper from Google catapulted the deep learning architecture of transformers into the spotlight, starting a revolution in AI that quickly reached escape velocity.

Now, academia (which is becoming less and less relevant) and the industry as a whole are racing to uncover the most efficient architectures and innovations that push the boundaries of what’s possible. They’re looking to unlock the full potential of these models with the lowest GPU consumption. Just look at Microsoft’s Phi family of small but remarkably capable models.

This pursuit of efficiency and power in transformer architecture presents a fascinating dichotomy.

On one hand, we might witness a “highway effect,” where increased capacity leads to even greater demand and utilization — much like how adding lanes to a highway often results in more traffic rather than less congestion. In the AI world, this could manifest as more powerful models being applied to increasingly complex tasks, continuously pushing the limits of what’s achievable.

On the other hand, we could encounter a “Not In My Back Yard” (NIMBY) effect, where excess capacity paradoxically diminishes perceived value. In the context of AI, this might translate to a saturation point where further improvements in model efficiency or power result in diminishing returns. This could potentially shift focus away from raw capability to more nuanced aspects like interpretability, fairness, or specialized applications.

A Catastrophic Blow-Up

Finally, the most unlikely scenario: a catastrophic blow-up. What if somewhere, someone with hundreds of thousands of H100s screws up and is forced to write off a significant portion of the H100s they acquired? Could that cascade over the rest of the industry as financial crises have in the past?

Let’s imagine one possible scenario. Let’s say that CoreWeave, having borrowed $2.3 billion against H100s, can’t repay its debt, and the H100s are foreclosed.

Although all the details aren’t public, the information suggests CoreWeave has a three-year repayment period. That would require around a 50% utilization rate to break even on the H100s.
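A back-of-the-envelope sketch shows how a break-even utilization like this can be estimated. It is not a reconstruction of CoreWeave’s actual numbers: it reuses this article’s own figures (H100s at $25,000 to $30,000 each, and seven H100s renting for under $300 a day) and ignores interest, power, staff, and every other operating cost. Because the rental price is an upper bound, the real break-even point would be higher.

```python
HOURS_PER_YEAR = 365 * 24


def breakeven_utilization(gpu_price_usd: float, hourly_rate_usd: float,
                          repayment_years: float) -> float:
    """Share of hours a GPU must be rented to recoup its purchase price."""
    max_revenue = hourly_rate_usd * HOURS_PER_YEAR * repayment_years
    return gpu_price_usd / max_revenue


# "<$300 a day" for seven H100s, taken at its upper bound.
hourly_rate = 300 / (7 * 24)
REPAYMENT_YEARS = 3

for price in (25_000, 30_000):
    utilization = breakeven_utilization(price, hourly_rate, REPAYMENT_YEARS)
    print(f"${price:,} per GPU -> break-even at {utilization:.0%} utilization")
```

With these inputs the break-even point lands between roughly 53% and 64%, the same ballpark as the figure above.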

Can CoreWeave achieve this utilization rate? Yes. Risky? Absolutely, especially in a highly deflationary industry, where consumer prices drop while the cost of training the latest models increases by almost an order of magnitude with every new generation. In this context, infrastructure companies are fighting tooth and nail for users, and now that supply bottlenecks have (more or less) been solved, price is the critical competitive factor.

If the underwriters of the debt need to foreclose on them, we may see a cascade effect.

(In all honesty, in the event of a massive write-off, I don’t know if the current level of investment in AI could have a direct impact on the broader economy. It feels odd — but that’s not the point I want to make here.)

The Revenue Perspective

David Cahn from Sequoia Capital estimates a $600 billion gap in AI revenue, up from $200 billion back in September 2023. His calculations are straightforward: The end-user value must cover the value generated across the entire chain. Otherwise, the CapEx investment isn’t justified.

For example, take Nvidia’s run-rate revenue forecast and double it to account for the total cost of AI data centers. (GPUs are half the total cost of ownership, with the other half including energy, buildings, backup generators, etc.)

Then, double it again to reflect a 50% gross margin for the end-user of the GPU.
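The two doublings can be written as a short calculation. This is a sketch of the logic as described here, not Cahn’s own spreadsheet: the function and parameter names are hypothetical, and the $150 billion run rate is simply back-solved from the $600 billion figure rather than quoted in this article.

```python
def required_end_user_revenue(gpu_run_rate_usd: float,
                              gpu_share_of_tco: float = 0.5,
                              end_user_gross_margin: float = 0.5) -> float:
    """End-user revenue needed to justify a given GPU spend.

    Step 1: scale GPU spend up to total data center cost (GPUs are half of TCO).
    Step 2: scale that cost up so the GPU's end user keeps a 50% gross margin.
    """
    data_center_cost = gpu_run_rate_usd / gpu_share_of_tco
    return data_center_cost / (1 - end_user_gross_margin)


TARGET_REVENUE_USD = 600e9

# Back-solve the GPU run rate implied by the $600B figure (two halvings).
implied_run_rate = TARGET_REVENUE_USD * 0.5 * (1 - 0.5)

print(f"Implied GPU run rate: ${implied_run_rate / 1e9:.0f}B")
print(f"Required revenue:     ${required_end_user_revenue(implied_run_rate) / 1e9:.0f}B")
```

Each dollar of GPU revenue therefore needs about four dollars of end-user revenue behind it, which is why the required figure grows so quickly as chip sales grow.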

In line with this, MIT economist Daron Acemoglu argues that AI will only contribute about 1% to GDP output over the next decade. This translates to roughly $330 billion for the U.S. And if we conservatively double that for a worldwide estimate, it barely covers the $600 billion gap discussed earlier. And that’s only for this year’s forecast! So, these calculations clearly fall short if growth gets us to a $1 trillion data center.

Acemoglu offers two arguments for this. First, he estimates that only about 25% of automatable tasks will be cost-effectively impacted (e.g., for replacing a call center agent, the cost of GPT-5 must be less than that of the agent). Second, he is skeptical about scaling laws: more data and compute don’t necessarily mean better models.

Both arguments boil down to the idea that AI doesn’t progress fast enough in quality and efficiency to justify the investment. However, this perspective misses a crucial point in understanding how value is unlocked.

The Stagnation Argument

A stagnation thesis assumes scaling laws are broken: models aren’t progressing at the promised speed, so there’s no justifiable revenue in the foreseeable future.

Since the emergence of new AI capabilities is unpredictable, this can seem true.

The stagnation camp often points to the lack of significant progress since the launch of GPT-4 in March 2023 as evidence.

Multitask Language Understanding evolution over time (Source)

It’s true that progress hasn’t been as quick as in previous years. But improvement is still happening at a breathtaking pace.

On the other hand, we have those in the industry camp. They find no reason to believe the scaling laws are broken (Ilya, Dario, Sam), and thus are touring investors, funds, and even governments to get the money they need to pour into the problem to achieve the next level of value. Their argument is simple: (i) progress is happening, and (ii) if it’s not happening faster, it’s because of a lack of resources.

And the data is in their favor. Since GPT-4 (which was trained over two years ago), the training compute dedicated to these models has been roughly the same. Even the newly released Llama 3 405B, with a training compute of 3.8e25 FLOPs, is “only” double that of GPT-4.
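To put "only double" in the order-of-magnitude terms often used when discussing scaling, here is a quick sketch. It takes the 3.8e25 figure (read here as total training FLOPs) and the 2x ratio from the paragraph above; the GPT-4 number is merely what those two figures imply, not an official disclosure.

```python
import math

LLAMA3_405B_FLOPS = 3.8e25   # training compute quoted in the text
RATIO_VS_GPT4 = 2.0          # "only double", per the text

# GPT-4 compute implied by the two figures above (not an official number).
implied_gpt4_flops = LLAMA3_405B_FLOPS / RATIO_VS_GPT4

# Express the jump in orders of magnitude (OOMs): log10 of the ratio.
ooms = math.log10(RATIO_VS_GPT4)

print(f"Implied GPT-4 compute: {implied_gpt4_flops:.1e} FLOPs")
print(f"Increase since GPT-4:  {ooms:.2f} OOM")
```

A doubling is about 0.3 of an order of magnitude, so by this measure frontier training runs have barely moved up the scaling curve since GPT-4.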

So where is the feeling of stagnation coming from?

Happiness = Reality - Expectations

For months, we’ve seen impressive “demos” on X (Twitter) promising that robots will take our jobs and a swarm of agents will become substitutes for all company functions (what we internally call “Twitter demos”). This novelty effect has attracted a lot of attention and money.

However, reality is always more complicated. Production-grade applications depend on several factors that aren’t easily obtained. Companies require:

1. Talent (scarce because no one has had experience with this technology at scale before)

2. Reliability (from models that are stochastic, although just today OpenAI released Structured Outputs, a significant advancement on this front)

3. Cost efficiency (which has come a long way, but inference is still expensive)

4. A clear use case (this is the one most eyes are on)

All these factors combined test people’s patience, and when patience wears thin due to high expectations, the result is a pessimistic outlook.

Growth’s Non-Linear Nature

Another factor fueling pessimism is the difficulty in understanding the potential of AI models in a rapidly evolving environment. Even for those who believe in the continuation of scaling laws, understanding what the next generation of frontier models will achieve is very challenging.

The value in AI models (and we could argue that the same is true of almost any technology wave) grows exponentially in two dimensions:

1. Performance on existing use cases. How good the model is at doing an economically relevant task.

2. The number of use cases within the technology’s reach. To make matters more complicated, in GenAI, new model capabilities (e.g., learning to code, doing math, translating languages…) emerge unexpectedly as the model gets trained.

To make the potential for exponential growth easier to visualize, I am going to share a mental image that helped me grasp the concept.

Imagine yourself at the center of a 2-D surface with scattered tasks. Tasks closer to you are easier to execute and have low value (e.g., correcting a typo), while tasks further away are more complex and have high value (e.g., curing cancer). GPT-2 allowed you to handle everything within arm’s reach: correcting the next word, generating nonsensical sentences for brainstorming, etc. It wasn’t fancy, but it captured our imagination.

Then came GPT-3 and 3.5, extending our reach. Suddenly, the models could write code and correct entire documents. We moved from automating one-second tasks to handling five-second tasks. With GPT-4, the reach extended even further, automating more complex tasks that could take up to five minutes — like drafting emails, creating detailed reports, or generating marketing content.

This progression continues, and with each model, we see exponentially higher value and more automated tasks.

We’re now so far from our starting point (AI only a few years ago) that we struggle even to see what will come next: what high-value use case will GPT-7 solve?

Bringing this back to the concept of value: while we may not see a justification for the investment now, and forecasting may be difficult, an order of magnitude (OOM) increase in compute (happening as we speak) could unlock more than an OOM in value. And all this is before we even start discussing an AI takeoff.

My argument is that the existence of such incredible potential (difficult as it is to predict or imagine) can disrupt all economic calculations, which is why we see some people asking for trillions of dollars in investment and others calling for a bubble burst.

The million-dollar question

Do we need to go through a burst? Or can the world move directly into a deployment phase without an economically devastating collapse? Will Wall Street or OpenAI win?

“My old boss Marc Andreessen liked to say that every failed idea from the dotcom bubble would work now. It just took time; it took years to build out broadband. Consumers had to buy PCs. Retailers and big companies needed to build e-commerce infrastructure. A whole online ad business had to evolve and grow. And more fundamentally, consumers and businesses had to change their behavior.” — Benedict Evans

The reality is that no one knows. What matters is that, one way or another, bubble or not, all the CapEx invested during the frenzy will still be there and will be useful for the future of AI, just as the internet infrastructure was put to use after the dot-com bubble and the train tracks after the collapse of the railroad industry.

The Future: AI’s Deployment Phase

So… returning to Perez’s S-curve framework.

The framework concludes with the deployment phase. It’s the happy ending where technology permeates every facet of life, and society reaps the rewards. I do not doubt that AI will reach this stage.

Predicting whether we unlock AI’s full potential before or after the turning point is almost impossible, but we must remain optimistic. The transformative power of AI promises to revolutionize industries, improve daily lives, and drive unprecedented innovation.

The journey may be uncertain, but the destination holds immense promise.

When will we get to the AI deployment phase?

There are a few simple indicators to know whether we are in the deployment phase.

“Connecting a thermostat to the Internet wirelessly is awesome, but calling it an Internet-enabled thermostat will start to be like calling a vacuum cleaner an electricity-enabled broom.” — Jerry Neumann

We’ll reach an AI deployment phase when we start calling things by their name. Companies focused on models, kernels, or GPUs will be AI companies. The rest will simply be companies delivering great products powered by awesome AI technology.

There won’t be thousands of AI startups being born every day; there will simply be startups solving problems for their customers using AI.

Not every investor call of a public company will include an AI plan; instead, there will be improvements in productivity beyond imagination.

This has been Luzia’s approach almost from inception. We are a consumer assistant that outperforms Siri and Alexa because we leverage new technologies. But at the core, we aim to provide the best product to our users, regardless of the technology we use.

Our position is also strong no matter what happens. If there’s a CapEx reset, we’ve secured the funding to keep working on what matters: better products and better quality. Conversely, if scaling laws prevail, our users will benefit from more advanced products and enhanced utility. A win-win for our beloved users.

Luzia is a product-driven company with a user-centric focus, which is the right place to be no matter how the market shifts.

The only forecast I will make today is that, eventually, the rest of the industry will get there, too.